In the wake of recent acts of extreme brutality and injustice, and the mass protests that followed, we’re examining our role in perpetuating systems of inequality. We are responsible for our impact as a tech company, as a news reader, and, most acutely, as the developer of the machine learning algorithms behind Feedly AI.

Artificial intelligence and machine learning are powerful tools that allow Feedly AI to read thousands of articles published every day and prioritize a top selection based on the topics, organizations, and trends that matter to you. However, if not designed intentionally, these tools run the risk of reinforcing harmful cultural biases.

Bias sneaks into machine learning algorithms through incomplete or imbalanced training data. Without realizing it, we can underrepresent or overrepresent certain variables, and the algorithm learns the wrong patterns, often with dangerous outcomes.

In the case of Feedly AI, we risk introducing bias when teaching it broad topics such as “leadership.” Feedly AI learns these topics by finding common themes in sets of articles curated by the Feedly team. For the topic “leadership,” Feedly AI might pick out themes like strong management skills and building a supportive team culture. However, if more articles are published about male leaders than about female leaders, or more of them are added to the training set, Feedly AI might also learn that being male is a quality of leadership. Tracking which themes Feedly AI learns is an essential part of topic modeling, and it helps keep us from reinforcing our own biases or those of article authors and publishers.
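To make that kind of tracking concrete, here is a minimal sketch of one simple check, written in Python as an illustration rather than Feedly’s actual pipeline. It measures what share of a made-up “leadership” training set mentions male versus female pronouns and flags the set when the gap is large. The word lists, the 0.2 tolerance, and the function names are all hypothetical, and pronoun counting is only a crude proxy for the themes a real topic model learns.

```python
import re

# Hypothetical word lists: a crude proxy for gendered coverage, not an exhaustive lexicon.
MALE_TERMS = {"he", "him", "his"}
FEMALE_TERMS = {"she", "her", "hers"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return the set of distinct words in it."""
    return set(re.findall(r"[a-z']+", text.lower()))


def share_mentioning(articles: list[str], terms: set[str]) -> float:
    """Fraction of articles that mention at least one of the given terms."""
    hits = sum(1 for text in articles if tokenize(text) & terms)
    return hits / len(articles)


def audit_gender_balance(articles: list[str], tolerance: float = 0.2) -> dict:
    """Compare male vs. female mentions in a training set (hypothetical check).

    Flags the set as unbalanced when the two shares differ by more than
    `tolerance`, a threshold chosen only for illustration.
    """
    male = share_mentioning(articles, MALE_TERMS)
    female = share_mentioning(articles, FEMALE_TERMS)
    return {"male": male, "female": female, "balanced": abs(male - female) <= tolerance}


# A tiny, made-up "leadership" training set that skews toward male leaders.
leadership_set = [
    "He built a supportive team culture with strong management skills.",
    "His management style empowered the whole team.",
    "He led the company through a difficult merger.",
    "She built a supportive culture across the organization.",
]

print(audit_gender_balance(leadership_set))
# {'male': 0.75, 'female': 0.25, 'balanced': False}
```

A real audit would look at the themes a model actually weights rather than just pronouns, but even a check this simple can surface an imbalance before it makes its way into a topic.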

It’s on us as developers to be deliberate and transparent about how we account for bias in our training process. With that in mind, we’re excited to share what we’re working on to reduce bias at the most crucial stage: the training data.

Break down silos

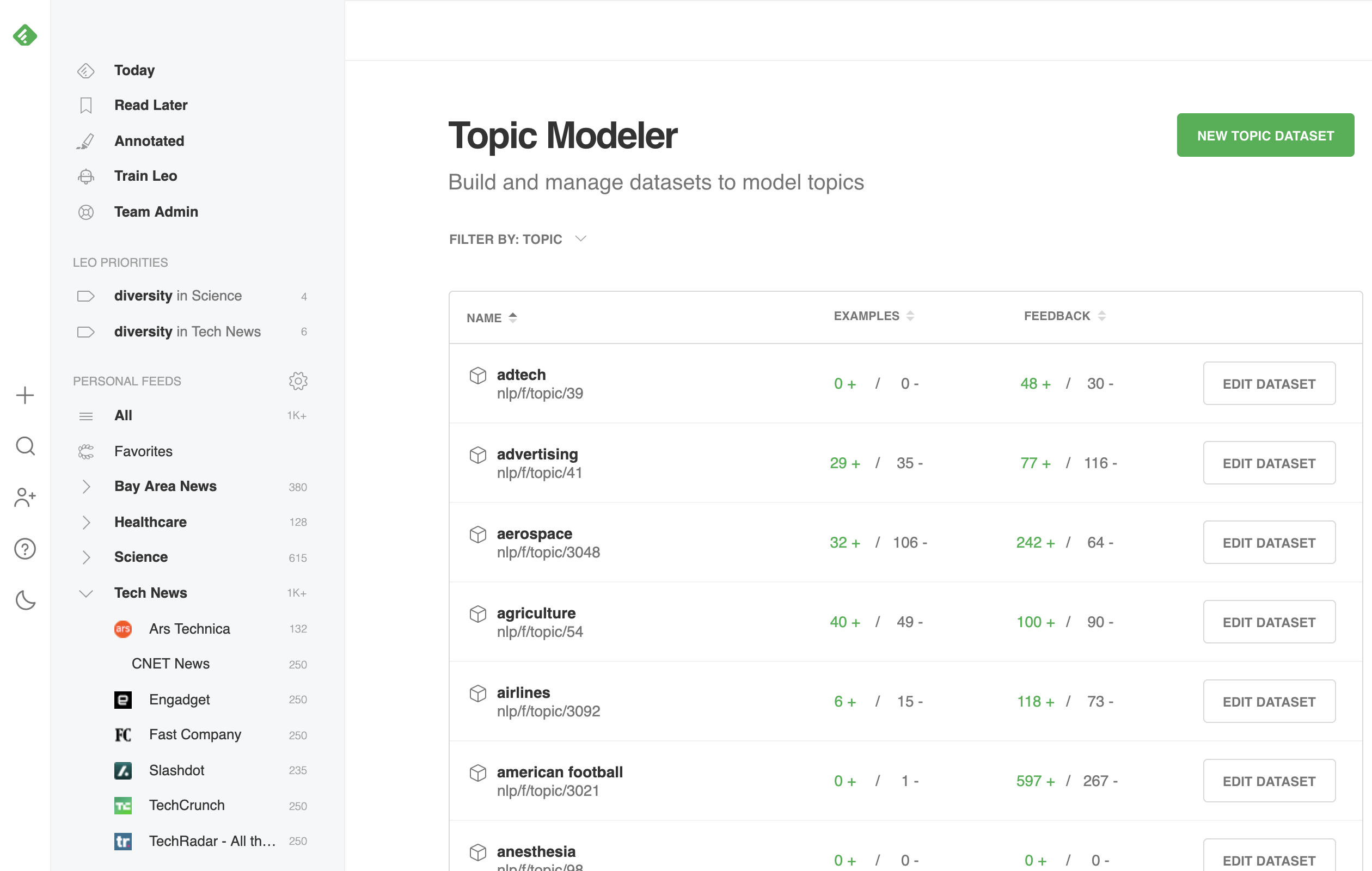

Collaboration among folks from diverse backgrounds helps us account for our blind spots. To make that collaboration possible, however, we need an accessible tool. The new topic modeler is that tool: it’s designed so that anyone in the Feedly community can help curate a dataset that teaches Feedly AI about topics they’re passionate about.

The topic modeler builds on the Feedly UI we know and love, letting multiple users search for articles to add to the training set and review Feedly AI’s learning progress. Our goal is to connect with experts in a variety of fields to build robust topics that represent our entire community, not just the engineering team.

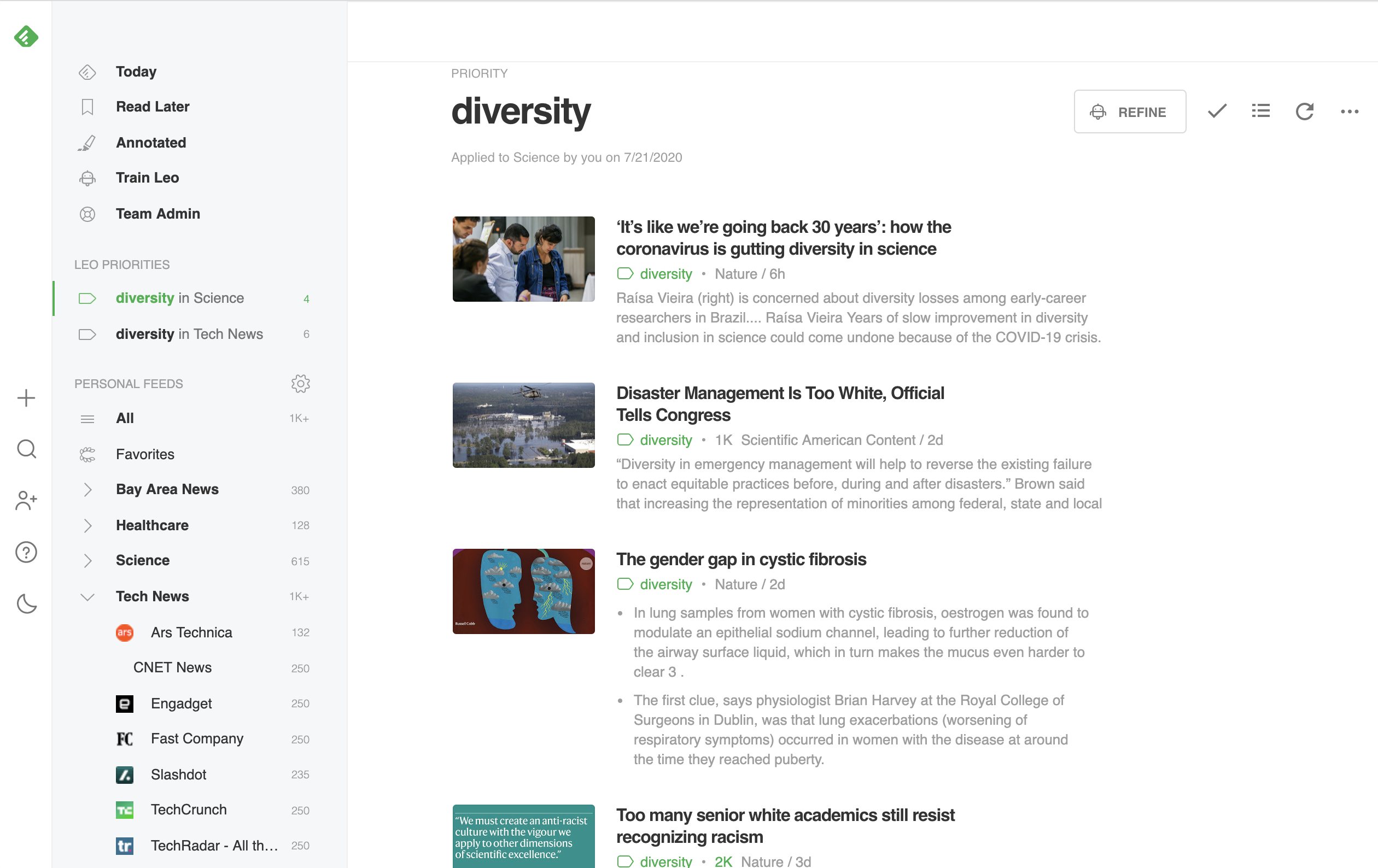

Put to the test: the diversity topic

Recently, two Feedly team members who are interested in diversity issues but have no machine learning experience road-tested the new tool to redesign our diversity topic. The result is a rich and nuanced topic: rather than focusing only on the buzzword “diversity,” Feedly AI will look for thousands of related keywords, including representation, inclusion, bias, discrimination, equal rights, and intersectionality. Now you can train Feedly AI to track diversity and inclusion progress in your industry and find essential information on how to build and maintain inclusive work cultures and hiring practices.

Feedly AI continuously learns

Topic modeling is not the only way to collaborate. Any Feedly user can help Feedly AI learn. When Feedly AI is wrong, you can use the ‘Less Like This’ down arrow button to let it know that an article it’s prioritized isn’t about a particular subject.

Feedly AI will also occasionally ask for your feedback via a prompt at the top of an article. If you see “Is this article about [topic]?”, let it know! Your feedback is incorporated into Feedly AI’s training set to fill in any gaps we missed and strengthen its understanding.

Join the movement

Beyond in-app feedback, feel free to reach out via email or join the Feedly Community Slack channel, especially if there’s a topic you’d like Feedly AI to learn. This is only the tip of the iceberg when it comes to addressing and dismantling systemic bias. We take our role as content mediators seriously, and we know that we are indebted to those who have fought for so long to bring these issues to our attention. Feedly AI is listening and learning.